Anthropic has launched Claude Sonnet 4.5, a new AI model that can code continuously for over 30 hours. The company claims it’s the world’s best coding model and excels at using computers and building complex AI agents.

By comparison, the previous model, Claude Opus 4, could stay focused for about seven hours before losing track. The longer working span lets developers hand off complex, multi-step coding tasks without the model losing its place.

Jared Kaplan, Anthropic’s co-founder and chief science officer, said Sonnet 4.5 is “stronger in almost every way” than the company’s high-end Opus model. Anthropic plans to release an improved version of Opus later this year.

Sonnet 4.5 scored 77.2% on SWE-bench Verified, a test of real-world software coding ability; with additional computing resources, its score rises to 82%. The model also leads OSWorld, which measures how well AI systems perform everyday computer tasks, with 61.4%, up from Sonnet 4’s 42.2% four months earlier.

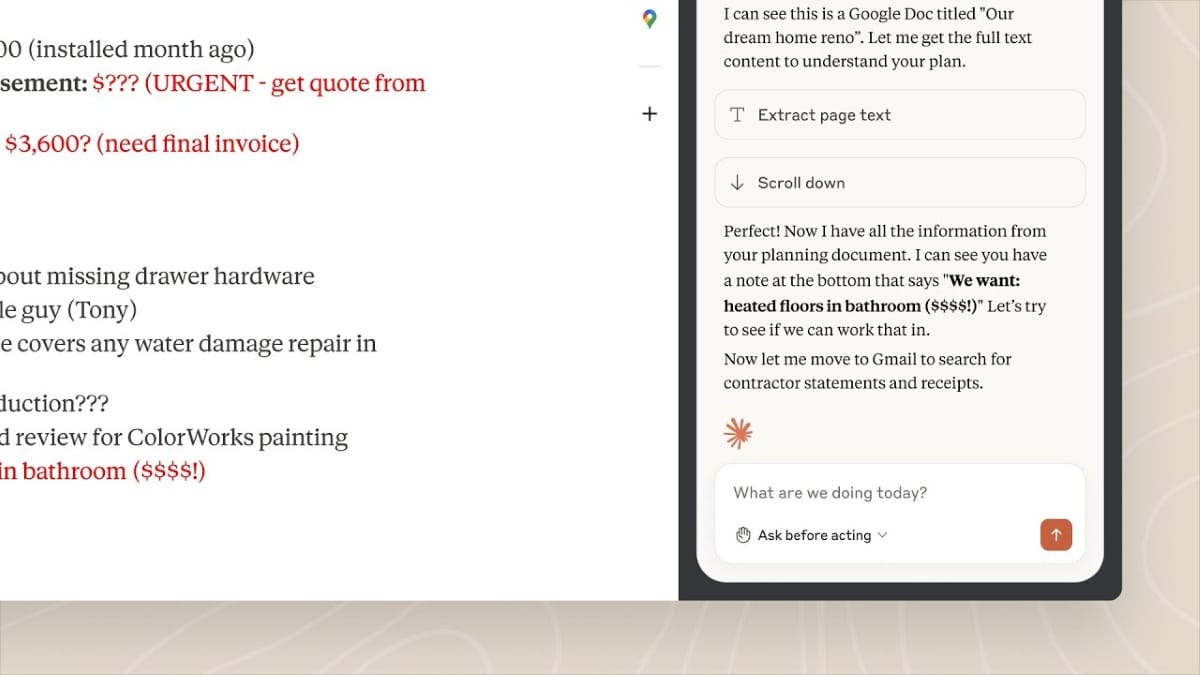

The release includes several product upgrades. Claude Code now offers “checkpoints” that save progress and let users roll back to an earlier state if something goes wrong. A new VS Code extension lets developers use Claude directly inside that popular code editor. The API has added context editing and memory features that help AI agents handle longer, more complex tasks.

In the Claude app, users can now create spreadsheets, slides, and documents directly in conversations. Max subscribers who joined the waitlist can access the Chrome extension, which allows Claude to navigate websites and complete tasks in a browser.

Anthropic has also released the Claude Agent SDK, giving developers the same tools the company uses to build Claude Code. This allows others to create their own specialized AI agents.

Early users reported significant improvements. GitHub Copilot noted better multi-step reasoning and code understanding. CoCounsel, a legal AI, found Sonnet 4.5 “state of the art on the most complex litigation tasks.” Hai Security reported it reduced vulnerability intake time by 44% while improving accuracy by 25%.

Anthropic calls Sonnet 4.5 its “most aligned frontier model yet.” According to the company, the model shows fewer problematic behaviors such as deception and power-seeking, and it has stronger defenses against prompt injection attacks, which try to trick AI models into doing harmful things.

The model is released under Anthropic’s AI Safety Level 3 protections, which include filters designed to detect potentially dangerous content related to chemical, biological, radiological, and nuclear weapons. Anthropic says it has significantly reduced false positives from these filters.

Sonnet 4.5 is available now through Anthropic’s apps and API. Pricing remains unchanged at $3 per million input tokens and $15 per million output tokens.

The release comes a week before OpenAI’s annual developer event, highlighting the ongoing competition among AI companies to win over programmers. Anthropic reached $5 billion in run-rate revenue in August and is now valued at $183 billion, with its coding software contributing significantly to that growth.
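To put the quoted per-token prices of $3 per million input tokens and $15 per million output tokens in concrete terms, here is a minimal Python sketch of a per-request cost estimate. It assumes those flat list prices apply to every token and ignores any other pricing tiers, discounts, or surcharges Anthropic may offer; the function name `estimate_cost` is purely illustrative.

```python
# Assumed flat list prices for Claude Sonnet 4.5, in USD per million tokens,
# as quoted in the article. Other pricing tiers or discounts are ignored.
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of one request at flat list prices.

    Hypothetical helper for illustration only; it is not part of any
    Anthropic SDK.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Multiply each token count by its per-million price, then scale down once.
    total = input_tokens * INPUT_PRICE_PER_MTOK + output_tokens * OUTPUT_PRICE_PER_MTOK
    return total / 1_000_000


if __name__ == "__main__":
    # A short prompt with a modest reply: 2,000 tokens in, 500 tokens out.
    print(f"${estimate_cost(2_000, 500):.4f}")
    # One million tokens each way.
    print(f"${estimate_cost(1_000_000, 1_000_000):.2f}")
```

Under these assumptions, a request with 2,000 input tokens and 500 output tokens costs about $0.0135, while a million tokens in each direction comes to $18.00. Because output tokens cost five times as much as input tokens, long generated responses dominate the bill for agentic coding sessions that write a lot of code.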