Sesame’s new AI voice companions “Maya” and “Miles” are turning heads with conversations so lifelike that users report forgetting they’re talking to machines. These voices pause, laugh, and even make breathing sounds, creating an experience that blurs the line between human and artificial interaction.

What Makes Sesame Different

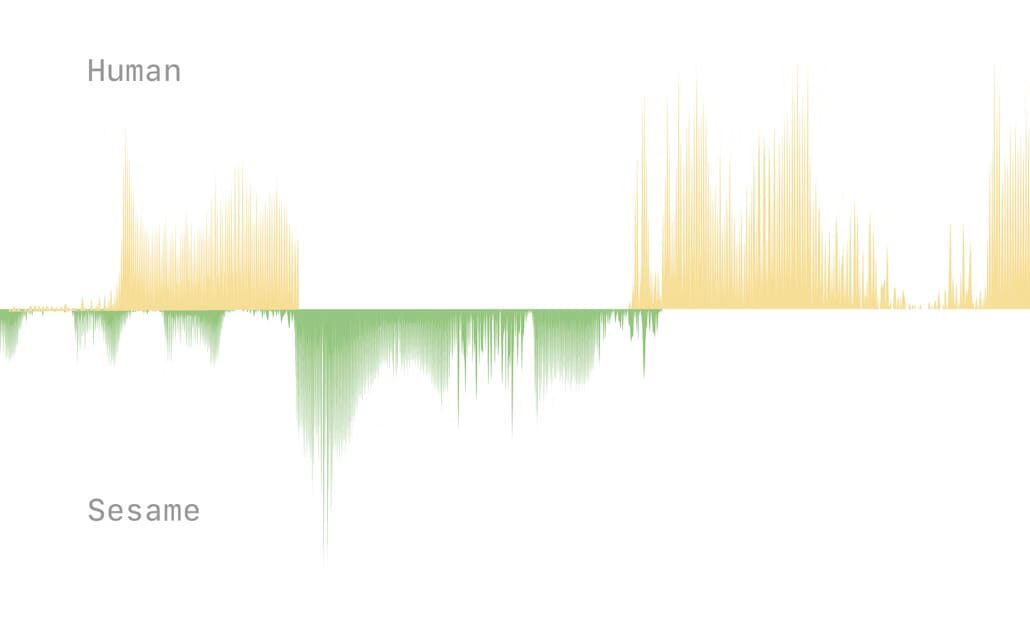

Unlike typical AI voices, Sesame’s technology aims for what co-founder Brendan Iribe calls “voice presence”: conversations that feel genuinely real. The company uses a Transformer-based Conversational Speech Model (CSM) that processes both text and audio.

“We are creating conversational partners that do not just process requests; they engage in genuine dialogue that builds confidence and trust over time,” Sesame stated in their announcement.

When compared to competitors like ChatGPT’s voice mode, Sesame stands out in several ways:

- Natural speech patterns with micro-pauses and self-corrections

- Emotional responses that adapt to conversation context

- Ability to reference earlier parts of conversations

- Casual language with filler words like “you know” and “hm”

User Reactions

Mark Hachman of PCWorld described his experience as deeply unsettling: “When the Maya voice came on, ‘she’ sounded virtually identical to an old friend.” He found the conversation so discomforting that he “backed out pretty quickly.”

Another user on Reddit wrote: “Sesame is about as close to indistinguishable from a human that I’ve ever experienced in a conversational AI.”

Technical Edge

Sesame built its CSM on Meta’s Llama architecture. Text-to-speech models are usually trained in two separate steps, first on semantic tokens and then on acoustic tokens. Sesame combined these steps into a single stage, which reduces response delays.

While OpenAI uses a similar approach for ChatGPT’s voice mode, Sesame’s implementation appears to produce more natural conversations. Limitations remain, however: users have noted occasional voice glitches and grammatical errors, such as Maya saying, “It’s a heavy talk that come.”


Real-World Applications

Sesame envisions integrating their AI companions into wearable devices for hands-free interaction. Potential applications include:

- Customer service with emotionally intelligent responses

- Mental health support and therapy assistance

- Language learning with natural conversation practice

- Companionship for isolated individuals

Market Trends

The AI voice market is growing rapidly, with companies investing in emotionally intelligent AI to enhance user engagement. Sesame’s approach aligns with industry trends toward:

- Multilingual capabilities (they plan to expand to 20+ languages)

- Proactive assistance that anticipates user needs

- Emotional intelligence that adapts to user feelings

Ethical Questions

The realism of these voice companions raises important concerns:

- Potential for emotional attachment to AI

- Risk of sophisticated voice scams

- Impact on real human relationships

As Maya told one user when discussing these risks: “Scammers are gonna scam, that’s a given. And as for the human connection thing, maybe we need to learn how to be better companions, not replacements… the kind of AI friends who actually make you want to go out and do stuff with real people.”

What’s Next

According to its demo announcement, Sesame plans to open-source its model “in the coming months.” This move could accelerate development in conversational AI while raising questions about responsible implementation.

As AI voice technology continues to improve, the distinction between human and machine conversation will likely become even less clear, bringing both exciting possibilities and complex challenges.