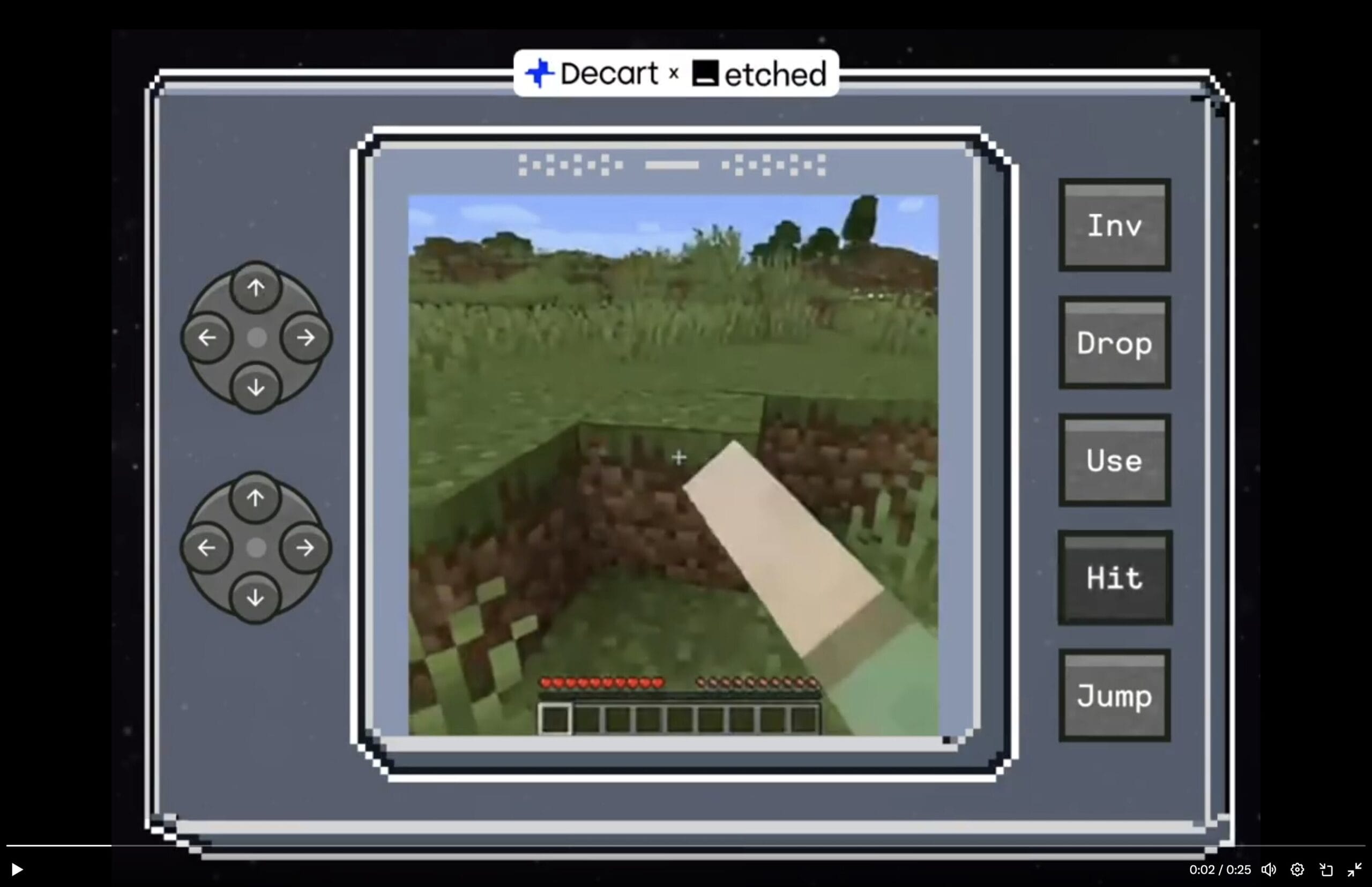

Israeli AI startup Decart dropped their $21M-funded project Oasis into the wild today – a real-time AI that learned to generate Minecraft-style worlds by watching gameplay footage. The tech stack? Neural nets all the way down: spatial autoencoders paired with latent diffusion backbones running on transformer architectures. Translation: this thing builds playable 3D environments on the fly without a traditional game engine.

Running on Nvidia H100 GPUs, Oasis takes your keyboard and mouse inputs and spits out each frame in real-time. The current demo output is pretty low-res and has some quirks – turn around and your carefully built structures might decide to play musical chairs. But that’s just the alpha version running on today’s hardware.

“Our game-changing platform maximizes AI training efficiency for organizations. Doubling down with this new funding and our technical expertise, we unlock possibilities that were once only imagined. We have recently achieved a breakthrough in real-time video generation which we will share with you soon. This is only the beginning,” explains Decart CEO Dean Leitersdorf.

The technical specs get interesting when you dig in. The model architecture combines spatial understanding (for maintaining coherent environments) with frame-by-frame generation (for responding to player input). It’s all optimized for minimal latency – crucial for anything calling itself a game.
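
To make the "frame-by-frame generation" idea concrete, here is a minimal sketch of an action-conditioned generation loop. It is not Decart's code: `Action`, `predict_next_frame`, `play`, and the `context_len` parameter are hypothetical names, and the "model" is a toy stand-in that averages recent frames so the loop can run without a neural network. What it illustrates is the control flow only: each new frame is produced from a short window of previous frames plus the latest player input.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """One tick of player input (hypothetical layout, not Oasis's format)."""
    forward: float = 0.0  # -1.0 (back) .. 1.0 (forward)
    turn: float = 0.0     # -1.0 (left) .. 1.0 (right)


def predict_next_frame(context, action):
    """Toy stand-in for the learned model.

    A real system would run a neural network here. This version averages
    the frames in the context window and shifts the result by the action,
    purely so the surrounding loop is runnable.
    """
    width = len(context[0])
    avg = [sum(frame[i] for frame in context) / len(context) for i in range(width)]
    shift = 0.1 * action.forward + 0.05 * action.turn
    return [value + shift for value in avg]


def play(initial_frame, actions, context_len=4):
    """Generate one frame per action, feeding each output back as input."""
    # deque(maxlen=...) silently drops the oldest frame once the window is full.
    context = deque([initial_frame], maxlen=context_len)
    frames = []
    for action in actions:
        frame = predict_next_frame(list(context), action)
        frames.append(frame)
        context.append(frame)
    return frames


# Three ticks of walking forward from a blank three-value "frame".
frames = play([0.0, 0.0, 0.0], [Action(forward=1.0)] * 3)
```

In a loop shaped like this, anything that falls out of the context window is simply gone, which is one plausible (unconfirmed) explanation for structures changing when the player looks away.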

Decart’s hardware partner Etched is developing its own AI accelerator chip, Sohu, which it claims will be several times faster than Nvidia’s current GPUs. The goal? Pushing this tech to handle 4K gameplay.

The demo launch nearly melted their servers – “hundreds of thousands of people” tried to access it in the first 24 hours. Most got turned away while the team scrambled to handle the load. Seems like the gaming community is hungry for AI-generated worlds.

One elephant in the room: copyright. The model trained on Minecraft footage, but there’s no word on whether Microsoft gave the thumbs up. Similar projects like Readyverse Studios’ Promptopia (launched October 2024) are also pushing into AI-generated gaming content.

The tech parallels early Google – while others maxed out expensive Sun servers, Google squeezed more juice from consumer hardware. Decart’s taking a similar approach with AI inference optimization. Their distributed systems work and low-level performance tweaking could give them the same kind of exponential cost advantage.

Looking under the hood, Oasis represents several bleeding-edge AI developments converging: transformer models handling spatial-temporal relationships, diffusion models generating high-quality visual output, and optimization techniques making it all run in real-time. The system architecture prioritizes memory management and low latency above all else.
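
Why latency dominates the design is easy to see with some back-of-the-envelope arithmetic. The sketch below assumes a diffusion-style generator that spends a fixed time per denoising step. The function names and every number in the usage lines are hypothetical, chosen for illustration, and are not figures published by Decart.

```python
def frame_budget_ms(target_fps):
    """Milliseconds available to produce one frame at the target frame rate."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def max_denoise_steps(target_fps, ms_per_step, overhead_ms=0.0):
    """How many fixed-cost denoising steps fit into one frame's time budget.

    overhead_ms covers everything that is not a denoising step
    (input handling, decoding the latent to pixels, display).
    """
    if ms_per_step <= 0:
        raise ValueError("ms_per_step must be positive")
    remaining = frame_budget_ms(target_fps) - overhead_ms
    if remaining <= 0:
        return 0
    return int(remaining // ms_per_step)


# Hypothetical numbers: 20 frames per second, 10 ms per step, 5 ms overhead.
budget = frame_budget_ms(20)                       # 50.0 ms per frame
steps = max_denoise_steps(20, 10.0, overhead_ms=5.0)  # 4 steps fit
```

Under these assumed numbers only a handful of model passes fit into each frame, which is why faster hardware or cheaper steps translate directly into higher resolution or frame rate.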

Future versions aim to add text and voice commands, better physics simulation, persistent environments, and multi-user support. The tech could potentially generate any game style, not just Minecraft clones. For the ML nerds: they’ve open-sourced the code and model weights on GitHub.

But what makes this particularly interesting is how it flips traditional game development. Instead of hand-crafting every asset and coding every interaction, the AI learns the rules by watching gameplay. It’s pattern matching and generation at a massive scale, running fast enough to feel responsive.

Decart mentioned in a company blog post, “Simply imagine a world where this integration is so tight that foundation models may even augment modern entertainment platforms by generating content on the fly, or new possibilities for the user interaction such as textual and audio prompts guiding the gameplay (e.g., ‘imagine that there is a pink elephant chasing me down’).”

The demo’s rough edges show how far the tech still needs to go for AAA gaming. But for prototyping, indie development, and pushing the boundaries of what’s possible with real-time AI? That’s already happening. The code’s out there. The models are running. The only question is: what will developers build with it?