Nvidia announced its latest Blackwell chips are leading industry benchmarks for training large language models (LLMs), delivering more than double the performance of previous generation chips. This advancement helps companies build AI systems faster and more efficiently.

In the latest MLPerf Training benchmarks (round 12), Nvidia’s Blackwell platform showed impressive results across all test categories. Most notably, it was the only submission for the benchmark’s toughest test: training the massive Llama 3.1 405B model.

“We’re still fairly early in the Blackwell product life cycle, so we fully expect to be getting more performance over time,” said Dave Salvator, Director of Accelerated Computing Products at Nvidia, during a press briefing.

The performance jump is significant. On the Llama 3.1 405B test, Blackwell chips delivered 2.2 times better performance than previous-generation Hopper chips when using the same number of processors. For the Llama 2 70B fine-tuning benchmark, Blackwell showed 2.5 times more performance.

Before Blackwell, Nvidia’s Hopper architecture (H100 chips) dominated AI training. These chips were used to train many of today’s popular AI models, but the growing size and complexity of new models demanded more power.

While Nvidia leads the AI chip market, competitors do exist. AMD offers MI300X accelerators, and several startups like Cerebras, Graphcore, and SambaNova have developed specialized AI chips. However, none submitted results for this round of MLPerf benchmarks, highlighting Nvidia’s current technical advantage.

Similar Posts

Nvidia’s dominance stems from more than just powerful chips. The company has built a complete ecosystem including specialized software (CUDA-X libraries, NeMo Framework), networking infrastructure, and system designs. This integrated approach helps Nvidia maintain its leadership position despite competition.

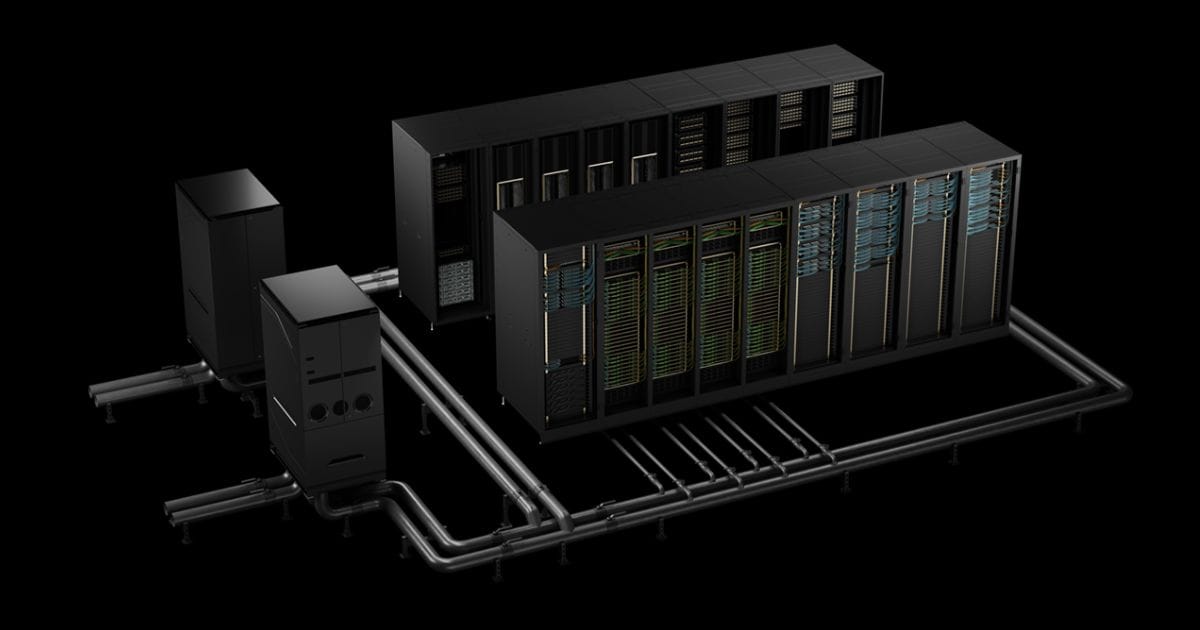

For the benchmarks, Nvidia used two AI supercomputers powered by Blackwell: Tyche (using GB200 NVL72 rack systems) and Nyx (using DGX B200 systems). In collaboration with CoreWeave and IBM, they also submitted results using 2,496 Blackwell GPUs and 1,248 Nvidia Grace CPUs—the largest MLPerf Training submission ever recorded.

The Blackwell architecture’s improvements include high-density liquid-cooled racks, 13.4TB of coherent memory per rack, fifth-generation NVLink and NVLink Switch interconnect technologies, and Quantum-2 InfiniBand networking. These technical advancements help AI companies train ever-larger models more quickly.

Faster training times matter because they let companies develop new AI models more rapidly. What once took months can now be completed in weeks or even days, accelerating innovation across industries from healthcare to transportation.

Nvidia’s partners in this benchmark round included ASUS, Cisco, Giga Computing, Lambda, Lenovo, Quanta Cloud Technology, and Supermicro, showing broad industry adoption of their technology.

As Jensen Huang, Nvidia’s CEO, has emphasized, the company has evolved “from being just a chip company to not only being a system company with things like our DGX servers, to now building entire racks and data centers” that function as “AI factories.”

This approach has helped Nvidia capture approximately 80% of the AI chip market, making it a crucial supplier for companies building generative AI applications.